Pedagogy Over Panic

How College Leaders Are Intentionally Using AI to Protect Degrees and Student Learning

Summary

It’s not too late to save university degrees from being overrun by AI models that offer unlimited opportunities for students to offload cognitive effort (so far without much risk of detection). Indeed, there are good reasons for colleges to encourage the use of AI on terms that support pedagogy, ensure student learning, and guarantee students graduate with the critical thinking skills that are essential for employment and success in life.

What does pedagogically sound AI look like? One of the few places to find it today is the student learning support platform built by Australian edtech company Studiosity, a technology leader and pioneer that has served institutions around the world for two decades. According to Professor Judyth Sachs, Chief Academic Officer at Studiosity, “pedagogically-sound ‘AI for Learning’ requires that any solution be built on an established evidence base, provide educators with full and transparent access to student activities, and that all functionality be engineered to foster development of students’ higher-order thinking skills, rather than just taking shortcuts that look impressive but do not reflect authentic learning.”

As university leaders grapple with the simultaneous challenges of financial pressure and reputational risk, they are increasingly making a thoughtful pivot away from ‘technology for technology’s sake’. Instead, they are looking to proven solutions like Studiosity that are built on “AI for Learning” foundations and that will ensure they stay true to their mission without sacrificing autonomy and integrity to unconstrained general-purpose LLMs.

Degrees are losing value in the public’s mind, as some in the education sector appear paralyzed by a choice between two paths forward:

- One is a high-risk, no-action path that leaves students to general-purpose or consumer-revenue-driven AI, which becomes a tacitly accepted vehicle for cognitive offloading (or ‘brain rot,’ or indeed ‘cheating,’ to use pre-2023 vernacular). This scenario largely falls into an “implement now, define the problem later” pattern of decision making, or: ‘technology first, pedagogy second.’

- The other path sees more college leaders quietly taking back control and protecting institutional value and reputation by putting pedagogy first and technology second, to take advantage of the real power, and the biggest opportunity yet, of AI in higher education.

AI for Learning vs. AI for Productivity

The tension around AI in higher education has centered on the undeniable appeal of AI for Productivity versus the compliance and ethics of AI for Learning. Unconstrained LLMs are built for speed: to get things done faster by outsourcing cognitive effort. However, when students use unconstrained AI in their college-specific learning with the intent to finish tasks faster, they outsource authentic skills development. They also erode the value of any accreditation (and its issuer) they seek to “earn”.

In contrast, AI for Learning is about having students engage with AI in a way that is transparent, compliant, and fit-for-purpose, solving a specific, urgent need. Real AI for Learning doesn’t give answers and retains the student’s voice (there is no automatic option to “make more formal,” “make this sound appealing to my professor,” or even “accept all changes”). Deploying AI strategically, defensibly, and ethically means adopting AI for Learning that promotes, enables, and ensures authentic writing and critical thinking development. This must go deeper than a promise of learning ‘in the brochure.’ Universities need the evidence to show for it.

Proof, not promises

Technology for technology’s sake has never been justifiable, especially now that colleges face retention goals, financial pressures, and potentially existential reputational risk. AI for Learning is the antidote to post-2022 AI hype in degree spaces. Leading the ‘AI for Learning’ space is Studiosity, whose “hard no” to corrections, automated text generation, and “accept all changes” means students get pedagogically sound feedback, educator oversight, and peer interaction instead.

Functionally, Studiosity is an online learning support platform designed to provide human-centric, AI-powered feedback at scale to university students. It helps universities implement a strategic approach to AI that follows ethical best practice for feedback, including educator oversight, data protection policies, and increasing (not decreasing) connection to peers alongside genAI. Every piece of the platform supports teaching and learning, rather than enabling cognitive offloading.

So far, there is a compelling and evidence-based case for this strategy:

- The University of Toronto found that students with access to Studiosity were less likely to use AI to generate writing and more likely to adhere to integrity policies.

- A 2025 University of Bedfordshire study confirmed: “Grades improved by 11.1% for first and third year students, with an even greater increase of 21.5% for second year students.”

The underlying pedagogy also pays off, financially:

- The Nous Group conducted a meta-analysis of colleges using Studiosity and conservatively estimated a 4.4x return on investment in the form of retained students.

Across student backgrounds and degrees, even in disparate regions around the world, outcomes for engagement and confidence are consistent:

- The University of Adelaide, a global top-100 university, found the following about Studiosity: “The findings of this report, which include improved analytical rigor and academic integrity, suggest the AI tool is a pedagogically viable option offering long-term learning opportunities.”

- Queensland University of Technology found, “Use of the service was associated with positive retention and progression outcomes.”

- An 842% increase in participation at Plymouth Marjon University.

- 91% felt more confident at the Open University of Malaysia.

- 89% felt more confident at Kathmandu University.

The New Path Forward: Authenticity and Student Agency

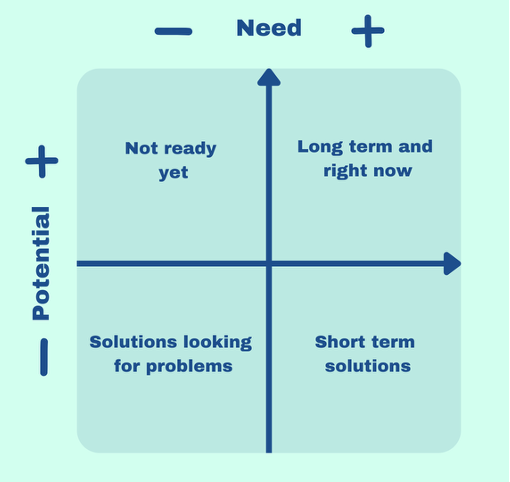

A shift is coming. Leon Furze, a researcher at Deakin University, describes an education technology ‘Need/Potential’ matrix that provides a useful backdrop for reflection at this moment in time. The matrix mirrors what some college leaders recognized early in the GPT era, and what many are discovering now.

- Much of the generative AI in education falls into the Low Need/Low Potential quadrant. This technology might be implemented, even celebrated, but it is still looking for a specific problem to solve.

- Some AI solutions solve a problem that has only interim value, notably AI for detection. These tools rely on pre-GPT student behavior and punitive-only responses, with no view to holistic prevention.

- Sometimes technology needs to be explored to determine best fit, and higher education does exploration and testing better than most. But current students should not be guinea pigs, nor should statutory duties around data, human connection, and valid accreditation come second to this research.

That’s why the focus is increasingly shifting toward AI tools for students that are High Need and High Future Potential. These are AI tools that serve the core mission of student learning and are fit-for-purpose, intentionally used, and evidence-based.

Leaders might use such a matrix to frame AI adoption decisions and audit new technologies for purpose. Most leaders are also now looking to policies and best practice, e.g. “The safe and effective use of AI in education” from the UK Department for Education (June 2025) or UNESCO’s “Guidance for generative AI in education and research” (September 2023). Image from: Furze, L. (2023, November 15). AI policy toolkit: The need/potential matrix. https://leonfurze.com/2023/11/15/ai-policy-toolkit-the-need-potential-matrix/

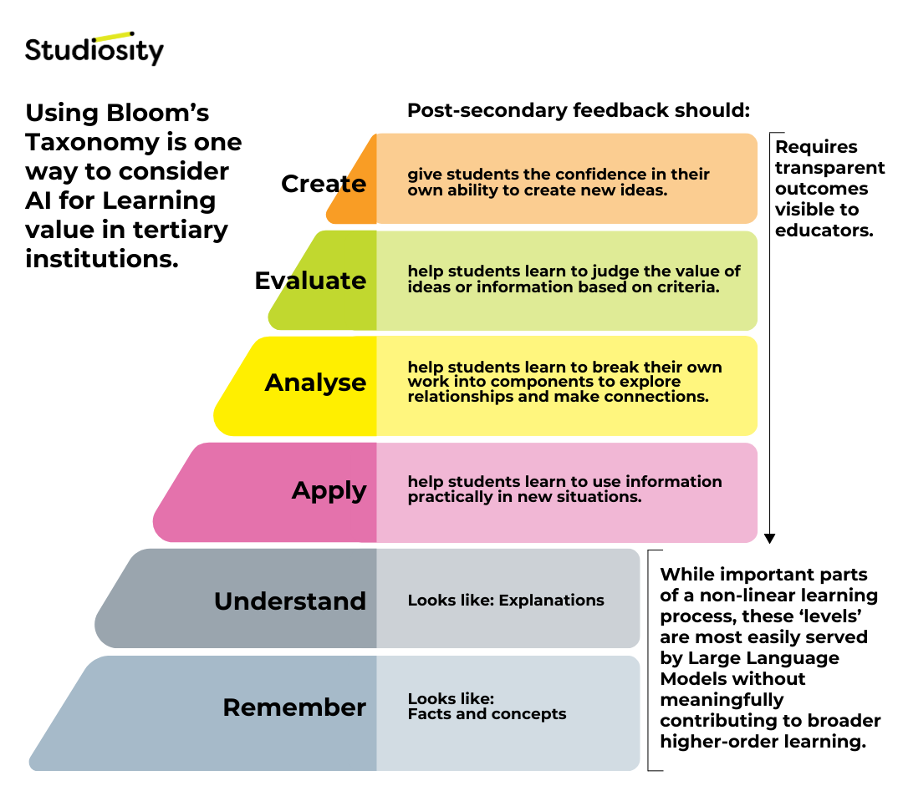

Bloom's Taxonomy is one way to show how AI for Learning should provide post-secondary feedback that moves beyond the basic recall and understanding delivered by non-education AI. Institutional leaders can ensure AI implementations support, and provide evidence for, students’ development of higher-order skills like applying, analysing, evaluating, and creating. Bloom, B. S. (1956). Taxonomy of educational objectives, handbook I: The cognitive domain. New York: David McKay Co Inc.

As Studiosity CEO Mike Larsen reflects, “Colleges want students to be graduating with a strong set of higher order cognitive skills, a strong sense of who they are, and how to think for themselves. Society and employers want this too. Demand it, in fact.”

“College leaders and educators are moving toward AI tools that improve more than just the bottom rungs of Bloom’s learning hierarchy, that is, beyond basic recall and understanding as an end game. Robust AI for Learning will help develop evaluation and analysis skills, for all students equally. All of this can be powered, enabled, indeed accelerated, using fit-for-purpose AI for Learning tools, like Studiosity.”

“It’s about empowering students, educators, and continuing a culture of integrity rather than doubling down on policing for cheating in isolation, or allowing an ‘anything goes’ approach.”

“Our university partners around the world have been those institutions taking a ‘hurry, slowly’ approach these past two years, pointing the compass at student learning to make rational decisions about mission-critical applications of AI like student support.”

As Dr. Tim Renick of Georgia State University shared at the ASU+GSV Summit in San Diego this year, student success done well "creates both efficiencies and positive ROI." It's about taking tested approaches that are grounded in evidence.

“Fortunately,” reflects Mike Larsen, “due diligence is second nature to higher education institutions, educators, and researchers. True AI for Learning will continue to grow into 2026 as colleges move to intentionally protect what matters most - their students’ learning.”

See: studiosity.com

Research: studiosity.com/matrix

Bloom, B. S. (1956). Taxonomy of educational objectives, handbook I: The cognitive domain. New York: David McKay Co Inc.

Crossing, S. (2025, April 22). At the Cutting Edge of Student Success in Higher Ed, at ASU-GSV: Pragmatic practices, holistic support, and scalable tech beyond AI hype. Studiosity. https://www.studiosity.com/blog/cutting-edge-student-success-asu-gsv-2025

Danielson, K. (2025). Supporting student success and wellbeing: Equitable access to support tools for diverse circumstances, University of Toronto. Presented in person at the Canadian Association of College & University Student Services (CACUSS) 2025 conference, 10 June 2025.

Department for Education. (2025). Generative artificial intelligence (AI) in education. GOV.UK. https://www.gov.uk/government/publications/generative-artificial-intelligence-in-education/generative-artificial-intelligence-ai-in-education

Dhakal, R. K., & Parajuli, A. (2025, May). Usage and impact of Studiosity's Writing Feedback+ platform: A case of Kathmandu University. Kathmandu University School of Education.

Furze, L. (2023, November 15). AI policy toolkit: The need/potential matrix. https://leonfurze.com/2023/11/15/ai-policy-toolkit-the-need-potential-matrix/

Lee, D. (2024). Meaningful approaches to learning and teaching in the age of AI. 2024 Festival of Learning and Teaching, The University of Adelaide.

Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., Raths, J., & Wittrock, M. C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's taxonomy of educational objectives.

Miao, F., & Holmes, W. (2023). Guidance for generative AI in education and research. UNESCO. https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research

Pike, D. (2024). Students' perspectives of a study support (Studiosity) service at a University. Research in Learning Technology, 32. https://doi.org/10.25304/rlt.v32.3015

Sachs, J. (2024). Getting ‘AI for Learning’ right to sustainably grow student success. A global, multi-institutional case study, with Prof Judyth Sachs. Presented at QS EduData Summit 2025, 4 June 2024.

Studiosity. (n.d.). QUT case study. Retrieved August 28, 2025, from https://www.studiosity.com/case-study-qut

Subramaniam, N. (2025). Conclusion on AI-generated feedback systems [Presentation slides]. Open University of Malaysia.

This content was paid for and created by Studiosity. The editorial staff of The Chronicle had no role in its preparation.